Dask Read CSV

Dask DataFrames can read and store data in many of the same formats as pandas DataFrames. The examples here read and write data in popular formats such as CSV.

`dd.read_csv` reads CSV files into a `dask.dataframe`. It parallelizes the `pandas.read_csv()` function in the following ways. It supports loading many files at once using globstrings, for example `df = dd.read_csv('myfiles.*.csv')`. In some cases it can also break up large files into smaller pieces.

Remote data is typically accessed by prepending a protocol like `s3://` to the paths used in common data access functions like `dd.read_csv`. The lower-level byte-reading step returns a list of lists of delayed values of bytes.

Work on the loaded data can use Dask's chunking, starting from `df = dd.read_csv(...)`. You may get a speedup if any printing is done in the workers that read the data.


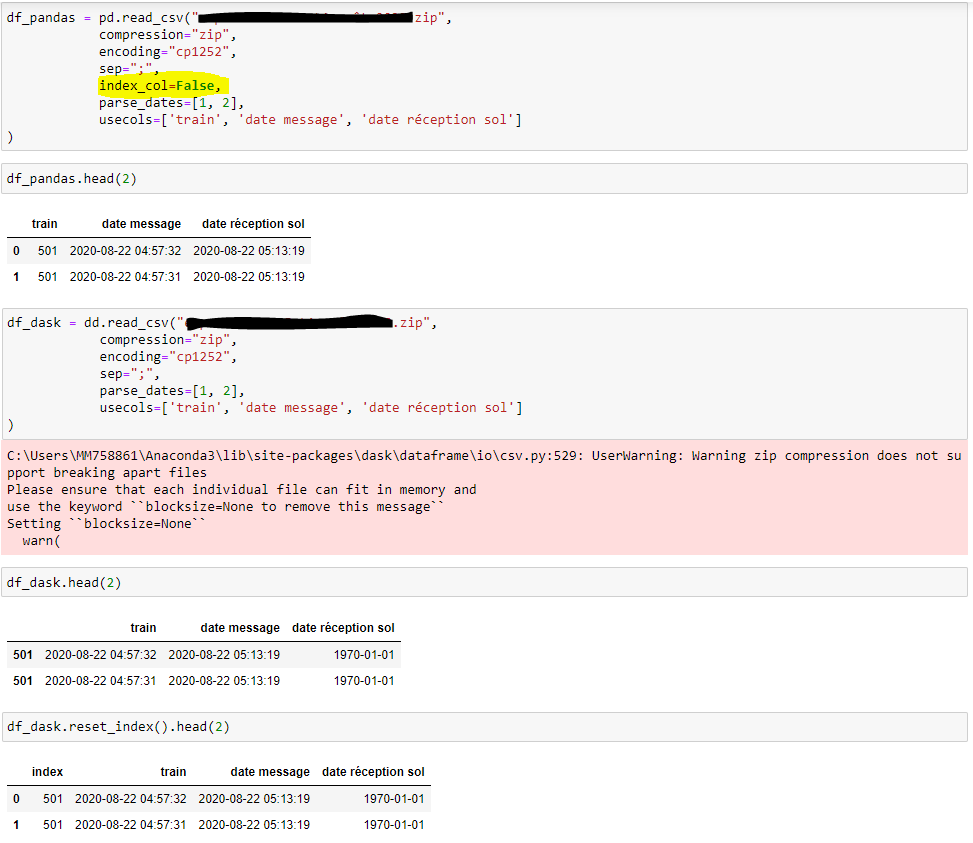

pandas.read_csv(index_col=False) with dask ? index problem Dask

Web you could run it using dask's chunking and maybe get a speedup is you do the printing in the workers which read the data: >>> df = dd.read_csv('myfiles.*.csv') in some cases it can break up large files: Web read csv files into a dask.dataframe this parallelizes the pandas.read_csv () function in the following ways: Df = dd.read_csv(.) # function.

Reading CSV files into Dask DataFrames with read_csv

List of lists of delayed values of bytes the lists of bytestrings where each. Web dask dataframes can read and store data in many of the same formats as pandas dataframes. Web you could run it using dask's chunking and maybe get a speedup is you do the printing in the workers which read the data: Web read csv files.

READ CSV in R 📁 (IMPORT CSV FILES in R) [with several EXAMPLES]

In this example we read and write data with the popular csv and. Df = dd.read_csv(.) # function to. List of lists of delayed values of bytes the lists of bytestrings where each. Web read csv files into a dask.dataframe this parallelizes the pandas.read_csv () function in the following ways: It supports loading many files at once using globstrings:

Dask Read Parquet Files into DataFrames with read_parquet

In this example we read and write data with the popular csv and. >>> df = dd.read_csv('myfiles.*.csv') in some cases it can break up large files: Web you could run it using dask's chunking and maybe get a speedup is you do the printing in the workers which read the data: List of lists of delayed values of bytes the.

Reading CSV files into Dask DataFrames with read_csv

In this example we read and write data with the popular csv and. Df = dd.read_csv(.) # function to. List of lists of delayed values of bytes the lists of bytestrings where each. Web you could run it using dask's chunking and maybe get a speedup is you do the printing in the workers which read the data: Web read.

dask.dataframe.read_csv() raises FileNotFoundError with HTTP file

Df = dd.read_csv(.) # function to. Web you could run it using dask's chunking and maybe get a speedup is you do the printing in the workers which read the data: >>> df = dd.read_csv('myfiles.*.csv') in some cases it can break up large files: In this example we read and write data with the popular csv and. Web typically this.

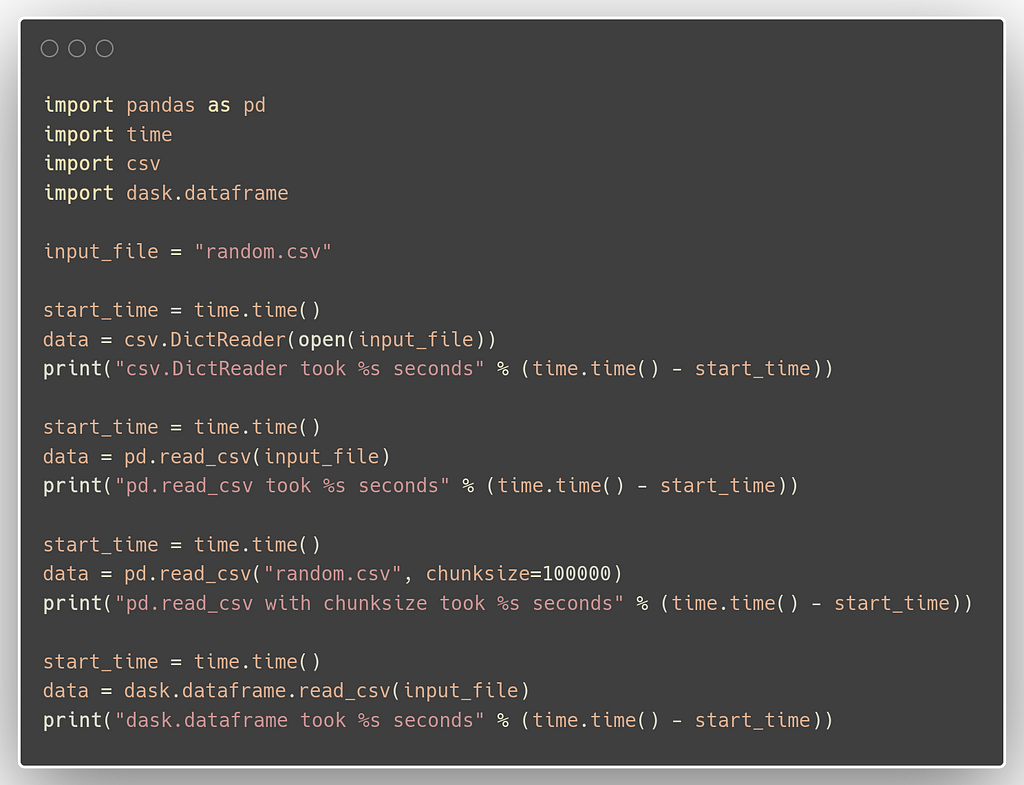

Best (fastest) ways to import CSV files in python for production

>>> df = dd.read_csv('myfiles.*.csv') in some cases it can break up large files: Df = dd.read_csv(.) # function to. Web you could run it using dask's chunking and maybe get a speedup is you do the printing in the workers which read the data: List of lists of delayed values of bytes the lists of bytestrings where each. Web read.

dask Keep original filenames in dask.dataframe.read_csv

Web dask dataframes can read and store data in many of the same formats as pandas dataframes. Web typically this is done by prepending a protocol like s3:// to paths used in common data access functions like dd.read_csv: In this example we read and write data with the popular csv and. Web you could run it using dask's chunking and.

How to Read CSV file in Java TechVidvan

Web dask dataframes can read and store data in many of the same formats as pandas dataframes. >>> df = dd.read_csv('myfiles.*.csv') in some cases it can break up large files: Web you could run it using dask's chunking and maybe get a speedup is you do the printing in the workers which read the data: Web typically this is done.

[Solved] How to read a compressed (gz) CSV file into a 9to5Answer

List of lists of delayed values of bytes the lists of bytestrings where each. Web read csv files into a dask.dataframe this parallelizes the pandas.read_csv () function in the following ways: Df = dd.read_csv(.) # function to. Web you could run it using dask's chunking and maybe get a speedup is you do the printing in the workers which read.

