Read From BigQuery With Apache Beam

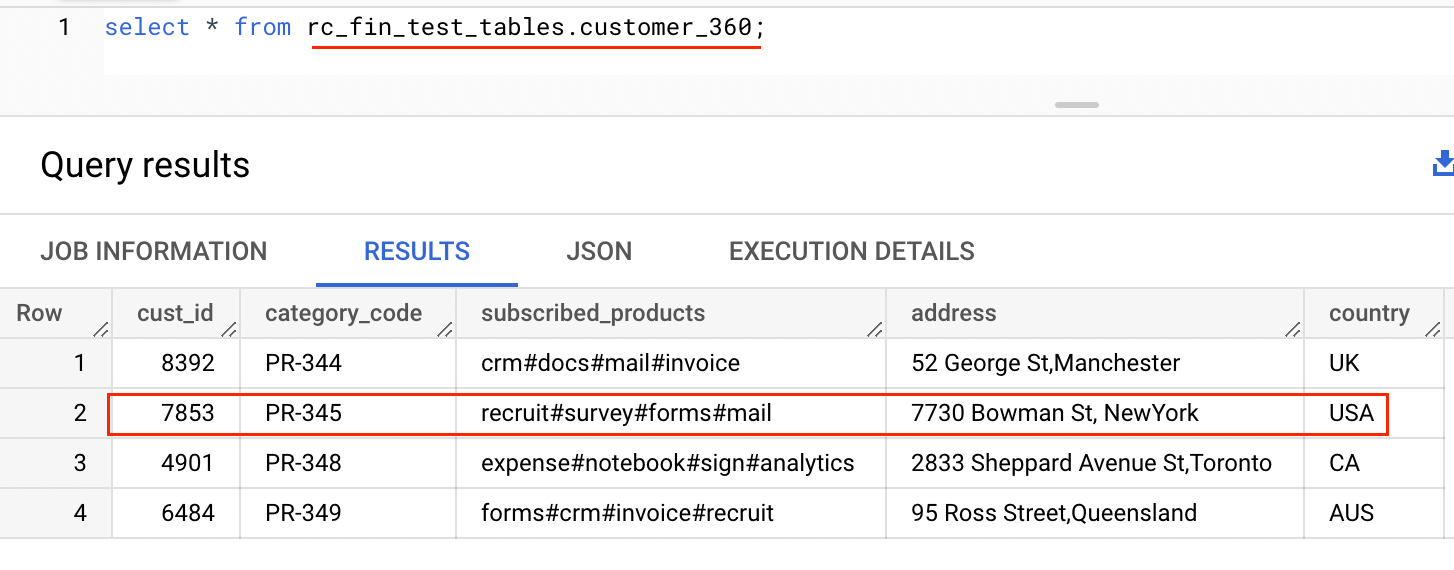

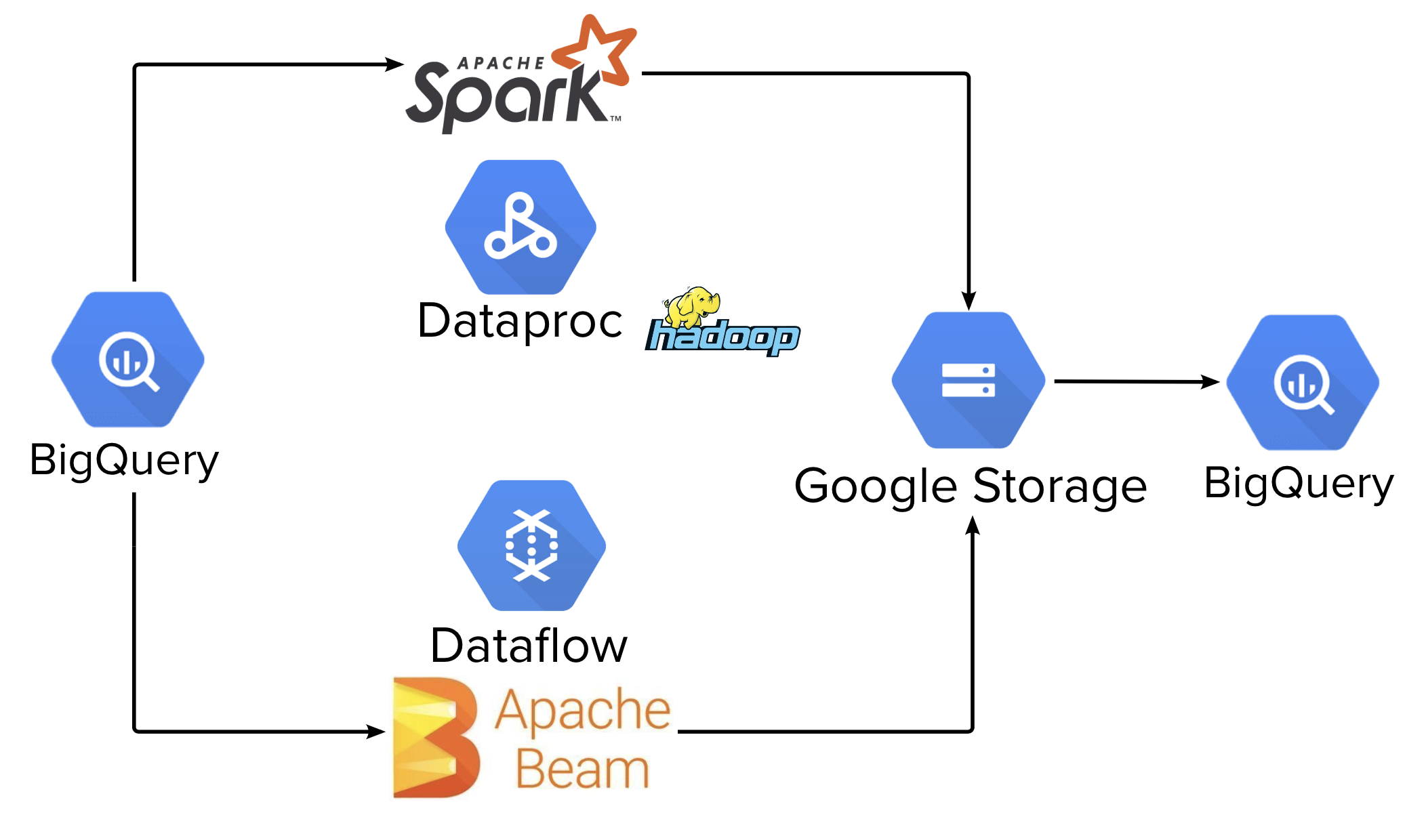

I'm trying to set up an Apache Beam pipeline that reads from Kafka and writes to BigQuery. I am new to Apache Beam. Can anyone please help me with my sample code, which tries to read JSON data using Apache Beam? Read CSV and write to BigQuery from Apache Beam. I'm using the logic from here to filter out some coordinates. To read an entire BigQuery table, use the table parameter with the BigQuery table name. This tutorial uses the Pub/Sub Topic to BigQuery template to create and run a Dataflow template job using the Google Cloud console or Google Cloud CLI. A write transform to a BigQuerySink accepts PCollections of dictionaries. For example, beam.io.Read(beam.io.BigQuerySource(table_spec)). Read files from multiple folders in Apache Beam and map outputs to filenames.
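
As a minimal sketch of reading a whole table, the pipeline below passes a table spec to `beam.io.ReadFromBigQuery` through its `table` parameter and handles each row as a Python dictionary. The names `describe_row` and `run`, and every argument value, are hypothetical and chosen only for illustration. Running it assumes the `apache-beam[gcp]` package, Google Cloud credentials, and a Cloud Storage path for temporary files.

```python
def describe_row(row):
    """Format one BigQuery row (a dict of column name -> value) as text."""
    return ", ".join(f"{name}={row[name]}" for name in sorted(row))


def run(table_spec, project, temp_location):
    """Read every row of table_spec and print it.

    table_spec is a 'PROJECT:DATASET.TABLE' string; project and
    temp_location (a gs:// path) are placeholders supplied by the caller.
    """
    # Imported here so describe_row() can be used without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    options = PipelineOptions(project=project, temp_location=temp_location)
    with beam.Pipeline(options=options) as pipeline:
        (
            pipeline
            # The table parameter selects the entire table, not a query result.
            | "ReadTable" >> beam.io.ReadFromBigQuery(table=table_spec)
            | "Describe" >> beam.Map(describe_row)
            | "Print" >> beam.Map(print)
        )
```

Recent SDK releases appear to favor `ReadFromBigQuery` over the older `beam.io.Read(beam.io.BigQuerySource(table_spec))` form, so the sketch uses the former.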

The runner may use some caching techniques to share the side inputs between calls in order to avoid excessive reading. As per our requirement, I need to pass a JSON file containing five to ten JSON records as input, read this JSON data from the file line by line, and store it in BigQuery. I initially started off the journey with the Apache Beam solution for BigQuery via its Google BigQuery I/O connector. In the Java SDK, the read transform is declared as public abstract static class BigQueryIO.Read extends PTransform<PBegin, PCollection<TableRow>>. The following graphs show various metrics when reading from and writing to BigQuery.
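
The JSON requirement described above could be sketched as follows, assuming the input file holds one JSON object per line. The function names, the schema string, and all paths are hypothetical placeholders; the Beam transforms used (`ReadFromText`, `Filter`, `Map`, `WriteToBigQuery`) are part of the Python SDK.

```python
import json


def parse_json_line(line):
    """Turn one line of newline-delimited JSON into a dict (one table row)."""
    return json.loads(line)


def run(input_path, table_spec, schema, project, temp_location):
    """Read JSON records line by line and append them to a BigQuery table.

    schema is a 'name:TYPE,name:TYPE' string such as 'name:STRING,score:INTEGER'
    (an assumed example, not taken from the original question).
    """
    # Imported here so parse_json_line() can be used without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    options = PipelineOptions(project=project, temp_location=temp_location)
    with beam.Pipeline(options=options) as pipeline:
        (
            pipeline
            | "ReadLines" >> beam.io.ReadFromText(input_path)
            # Skip blank lines so json.loads never sees an empty string.
            | "SkipBlank" >> beam.Filter(lambda line: bool(line.strip()))
            | "ParseJson" >> beam.Map(parse_json_line)
            # WriteToBigQuery accepts a PCollection of dictionaries.
            | "WriteRows" >> beam.io.WriteToBigQuery(
                table_spec,
                schema=schema,
                create_disposition=beam.io.BigQueryDisposition.CREATE_IF_NEEDED,
                write_disposition=beam.io.BigQueryDisposition.WRITE_APPEND,
            )
        )
```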

Apache Beam BigQuery Python I/O. main_table = pipeline | 'verybig' >> beam.io.ReadFromBigQuery(...) side_table = ... How to output the data from Apache Beam to Google BigQuery.
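
The main-table and side-table pattern can be sketched like this, assuming the smaller table fits in memory and is passed to `beam.Map` as a dictionary side input with `beam.pvalue.AsDict`. The column names `country_code` and `country_name`, the step labels, and the helper `enrich` are hypothetical and not part of the truncated snippet above.

```python
def enrich(row, lookup):
    """Attach a label from the small lookup table to a row of the large one."""
    enriched = dict(row)
    enriched["country_name"] = lookup.get(row["country_code"], "unknown")
    return enriched


def run(main_spec, side_spec, project, temp_location):
    """Join a large table against a small one through a side input."""
    # Imported here so enrich() can be used without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    options = PipelineOptions(project=project, temp_location=temp_location)
    with beam.Pipeline(options=options) as pipeline:
        main_table = (
            pipeline | "VeryBig" >> beam.io.ReadFromBigQuery(table=main_spec)
        )
        side_table = (
            pipeline
            | "NotBig" >> beam.io.ReadFromBigQuery(table=side_spec)
            # AsDict expects (key, value) pairs.
            | "ToKeyValue" >> beam.Map(
                lambda r: (r["country_code"], r["country_name"]))
        )
        (
            main_table
            | "Enrich" >> beam.Map(enrich, lookup=beam.pvalue.AsDict(side_table))
            | "Print" >> beam.Map(print)
        )
```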


Apache Beam Start Your Adventure With Big Data Analityk.edu.pl

A BigQuery table or a query must be specified with beam.io.gcp.bigquery.ReadFromBigQuery.
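
One way to make the table-or-query rule explicit is a small helper that builds the keyword arguments before the transform is constructed. This is a sketch under assumptions: `read_kwargs` and `build_read` are hypothetical names, and the choice to enable standard SQL for queries is an illustrative default, not something the source states.

```python
def read_kwargs(table=None, query=None):
    """Return keyword arguments for ReadFromBigQuery.

    Exactly one of table ('PROJECT:DATASET.TABLE') or query (SQL text)
    must be given; anything else raises ValueError.
    """
    if (table is None) == (query is None):
        raise ValueError("Specify exactly one of table or query.")
    if table is not None:
        return {"table": table}
    # Assumed default: run the query with standard SQL rather than legacy SQL.
    return {"query": query, "use_standard_sql": True}


def build_read(table=None, query=None):
    """Create the read transform from either a table spec or a query."""
    # Imported here so read_kwargs() can be used without Beam installed.
    import apache_beam as beam

    return beam.io.ReadFromBigQuery(**read_kwargs(table=table, query=query))
```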


How to submit a BigQuery job using Google Cloud Dataflow/Apache Beam?

The default mode is to return table rows read from a BigQuery source as dictionaries. See the glossary for definitions. Using Apache Beam GCP DataflowRunner to write to BigQuery (Python) gives a ValueError.

GitHub jo8937/apachebeamdataflowpythonbigquerygeoipbatch

Table rows are returned as dictionaries for more convenient programming.

Apache Beam Tutorial Part 1 Intro YouTube

Union[str, apache_beam.options.value_provider.ValueProvider] = None, validate: ... Ever thought about how to read from a table in GCP BigQuery, perform some aggregation on it, and finally write the output to another table using a Beam pipeline?
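
A read, aggregate, and write pipeline of that kind might look like the sketch below, which sums one column per key with `beam.CombinePerKey(sum)`. The column names `category`, `amount`, and `total_amount`, the schema string, and the helper functions are all hypothetical; only the Beam transforms themselves come from the SDK.

```python
def to_key_value(row, key_column, value_column):
    """Turn a row dict into a (key, value) pair for per-key aggregation."""
    return row[key_column], row[value_column]


def to_output_row(key_total, key_column, total_column):
    """Turn an aggregated (key, total) pair back into a row dict."""
    key, total = key_total
    return {key_column: key, total_column: total}


def run(source_spec, dest_spec, project, temp_location):
    """Sum 'amount' per 'category' and write the totals to another table."""
    # Imported here so the helpers above can be used without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    options = PipelineOptions(project=project, temp_location=temp_location)
    with beam.Pipeline(options=options) as pipeline:
        (
            pipeline
            | "ReadSource" >> beam.io.ReadFromBigQuery(table=source_spec)
            | "ToKeyValue" >> beam.Map(to_key_value, "category", "amount")
            | "SumPerKey" >> beam.CombinePerKey(sum)
            | "ToRow" >> beam.Map(to_output_row, "category", "total_amount")
            | "WriteTotals" >> beam.io.WriteToBigQuery(
                dest_spec,
                schema="category:STRING,total_amount:FLOAT",
                create_disposition=beam.io.BigQueryDisposition.CREATE_IF_NEEDED,
                # Replace the destination contents on every run.
                write_disposition=beam.io.BigQueryDisposition.WRITE_TRUNCATE,
            )
        )
```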


Introduction to Apache Beam

What is the estimated cost to read from BigQuery? I have a GCS bucket from which I'm trying to read about 200k files and then write them to BigQuery. In this article you will learn the structure around Apache Beam pipeline syntax in Python.



When I learned that Spotify data engineers use Apache Beam in Scala for most of their pipeline jobs, I thought it would work for my pipelines.
